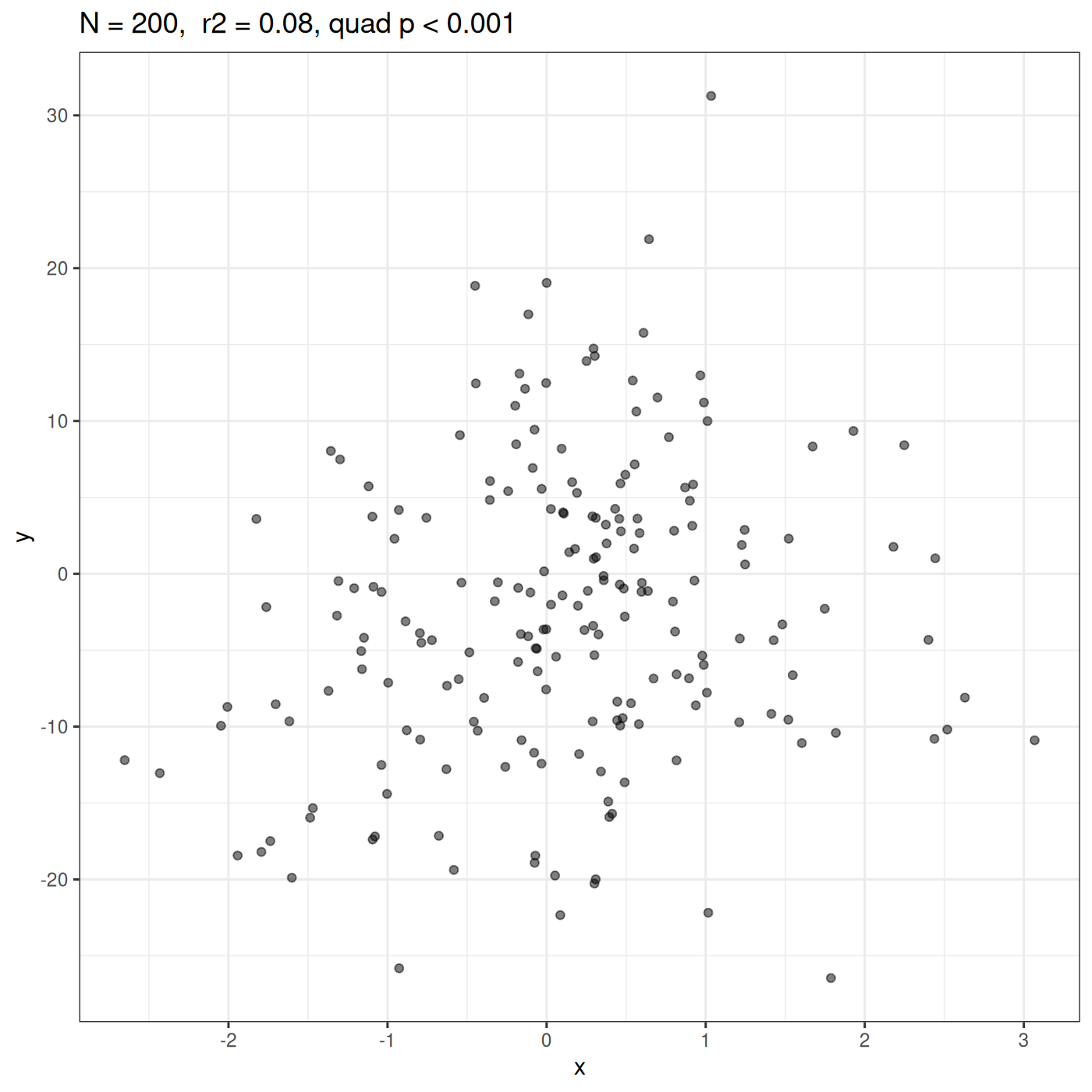

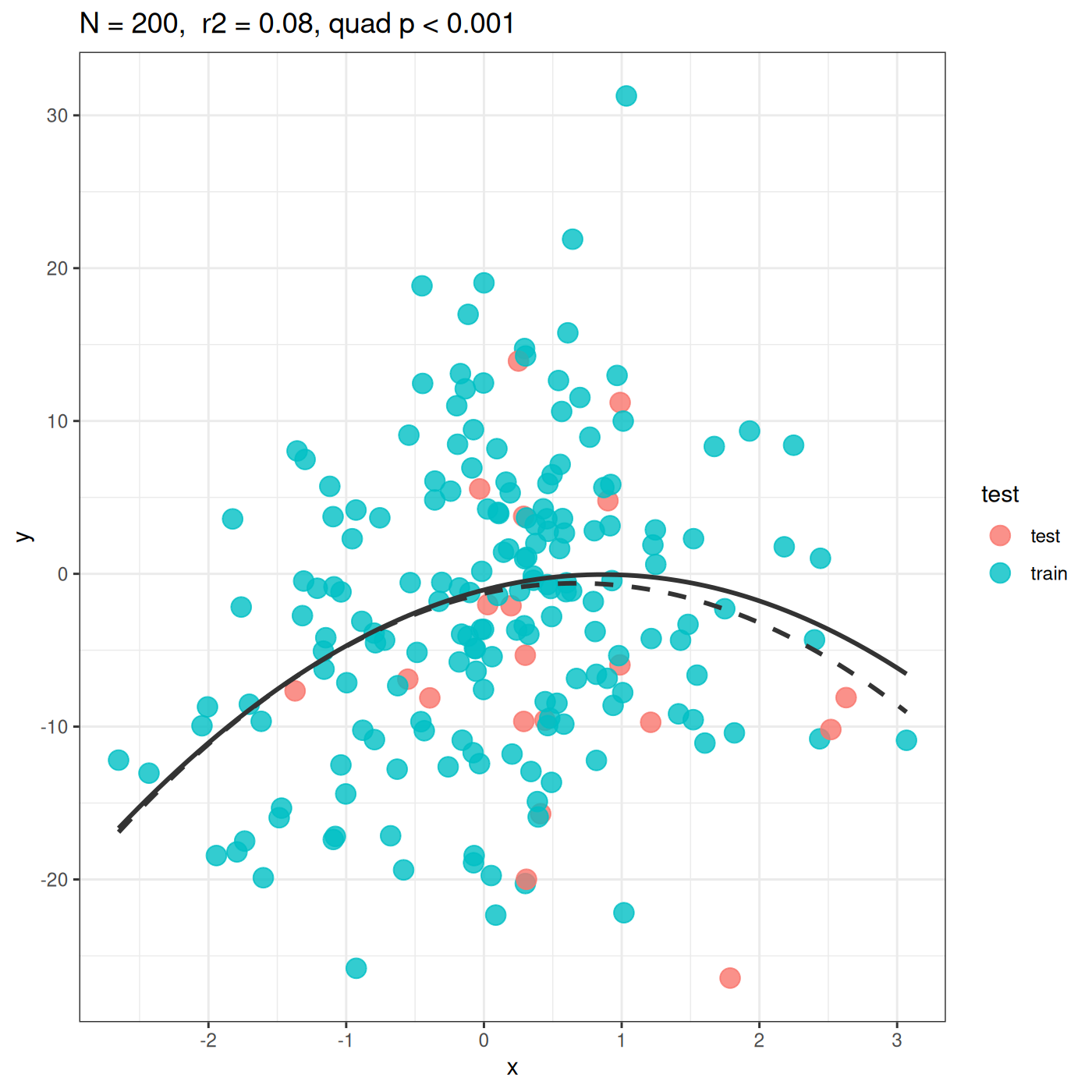

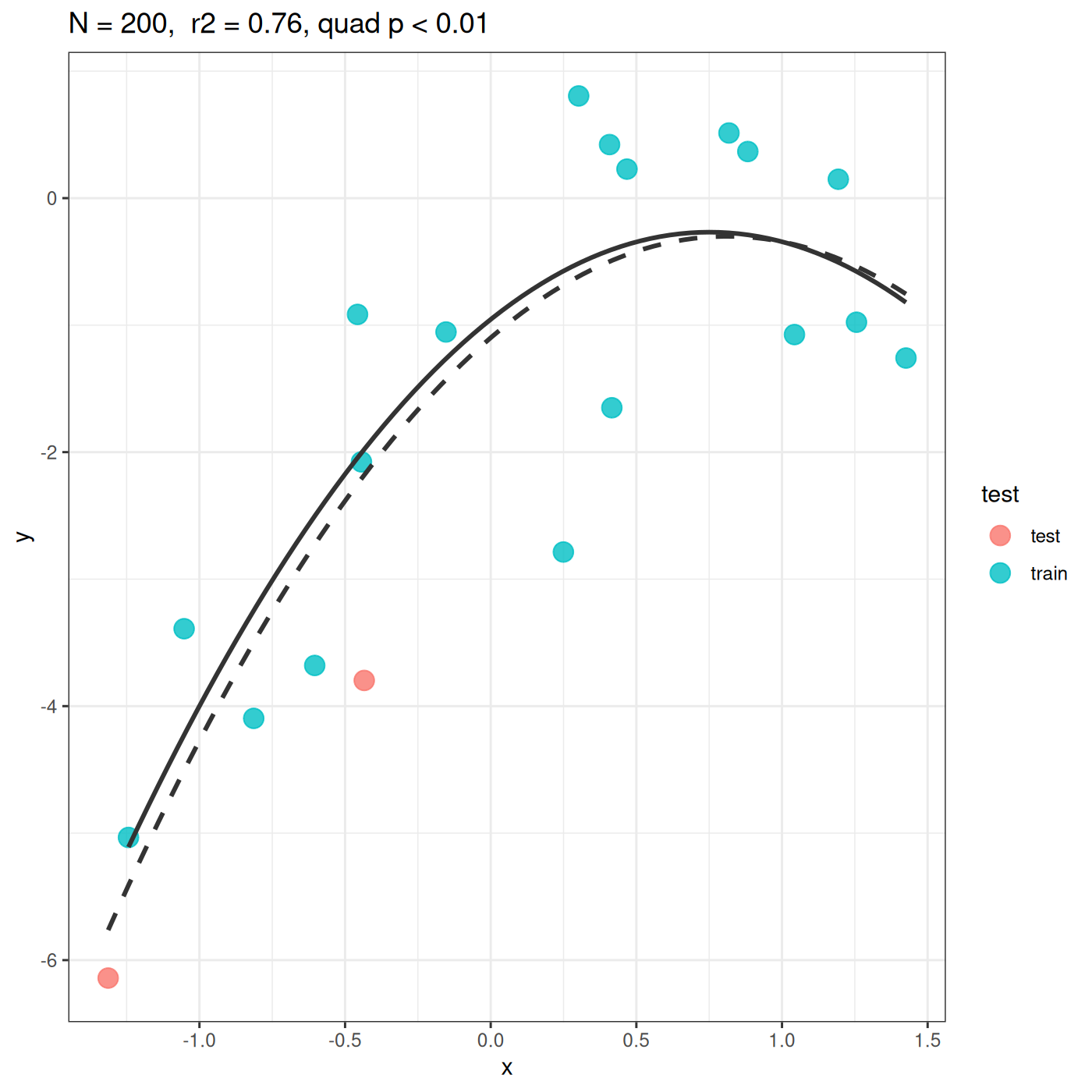

class: center, middle, inverse, title-slide # P-value and/or cross-validation ### Masatoshi Katabuchi ### September 29, 2020 --- # Assuming that - Your experimental design is solid. - Your measurement is biologically meaningful and shows greater variance than the measurement errors. - You know a theory or logic behind the patterns you observed.  Link to the slide. - Code of this slide [https://github.com/mattocci27/pval](https://github.com/mattocci27/pval) --- class: center, middle # Problems of p-values in linear and non-linear models --- class: center, middle # P-values sometimes get very small when there is no meaningfull pattern <img src="images/summay_plt.png" alt="drawing" width="450"/> --- # D. Large sample size with no clear pattern .pull-left-40[ <!-- --> ] .small[ .pull-right-60[ ``` ## ## Call: ## lm(formula = y ~ x + I(x^2), data = dat4) ## ## Residuals: ## Min 1Q Median 3Q Max ## -24.010 -6.456 0.004 6.009 32.078 ## ## Coefficients: ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) -1.2873 0.7917 -1.626 0.105535 ## x 1.9848 0.6677 2.973 0.003320 ** ## I(x^2) -1.4718 0.4316 -3.410 0.000788 *** ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## Residual standard error: 9.355 on 197 degrees of freedom ## Multiple R-squared: 0.08195, Adjusted R-squared: 0.07263 ## F-statistic: 8.792 on 2 and 197 DF, p-value: 0.0002201 ``` ]] --- class: center, middle # One solution: Cross-validation --- ## Idea Estimating how accurately a model can predict a new data set. .pull-left-50[ <!-- --> ] .small[ .pull-right-50[ 1. Use subset of the data (e.g., 90 %) to make a regression line (blue points, solid line) 2. Quantify how much this blue line can predict the data that were not used to make the model (red points). For example, we can calculate `\(r^2\)`. 3. Repeat 1-2 until you evaluate all the data. 4. Finally, calculate the mean of the each `\(r^2\)` (cross-validated `\(r^2\)`) - In this example, each `\(r^2\)` = -0.08, -0.05, -0.2, -0.12, -0.1, -0.09, 0.07, -0.04, 0.18, 0.12 - `\(r_{CV}^2\)` = -0.04 - When the model is very bad, `\(r^2\)` can be negative. ]] --- Small sample size is a problem too. .pull-left-50[ <!-- --> ] .small[ .pull-right-50[ - Based on 10-folds cross-validation, `\(r_{CV}^2\)` = 0.08, which is much smaller than `\(r^2\)` = 0.76 - If we use a liner model, `\(r_{CV}^2\)` = 0.37 - A linear regression is more meaningfull or useful than a quadratic regression in this example. - Reporting `\(r_{CV}^2\)` (and a figure) is more fair than reporting *P*-value alone and a table. ]] --- # Conclusion - Reporting *P*-value alone is a problem. - Presenting indices that assess the quality of predictors (e.g., `\(r 2\)`) is more kind for readers. - Model validation is important, especially when the model is complicated - Multiple regressions - General additive models... - Draw plots when it's possible - Example for cross-validation: - Katabuchi, M., S. J. Wright, N. G. Swenson, K. J. Feeley, R. Condit, S. P. Hubbell, and S. J. Davies. 2017. Contrasting outcomes of species- and community-level analyses of the temporal consistency of functional composition. Ecology 98:2273–2280.[https://doi.org/10.1002/ecy.1952](https://doi.org/10.1002/ecy.1952)